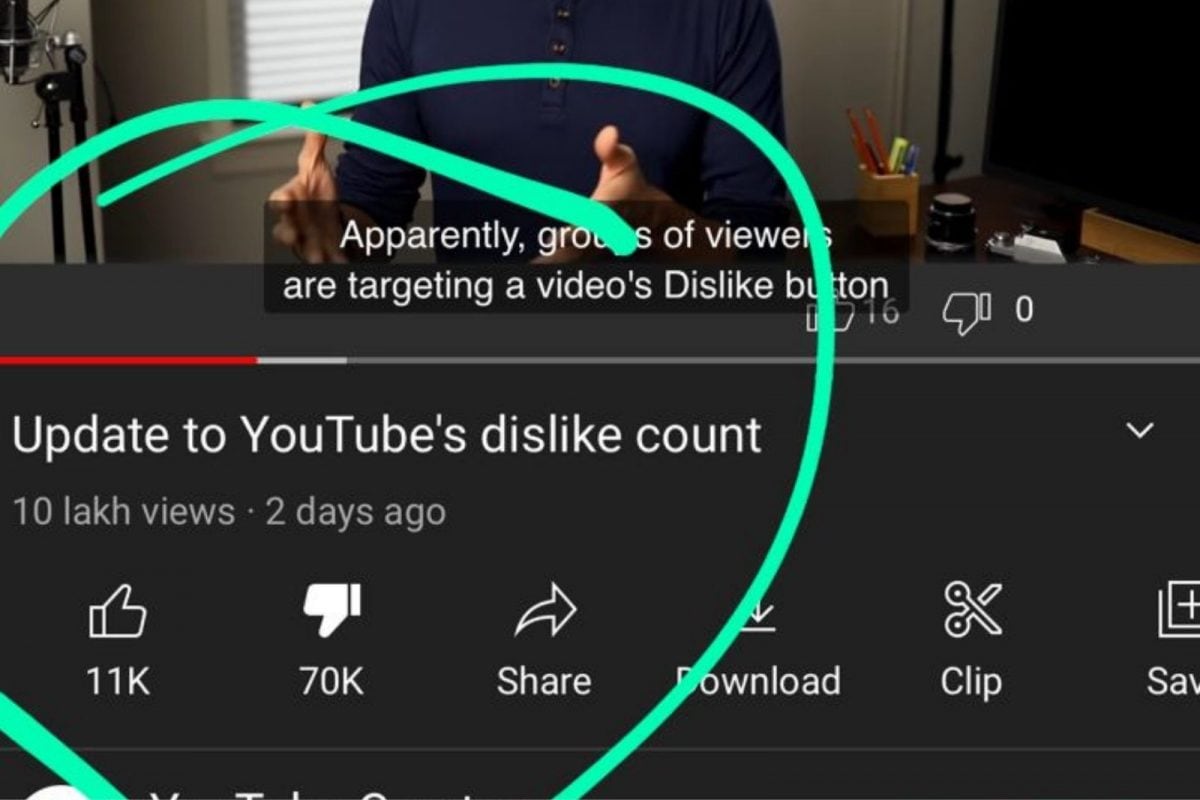

You’re not alone if you’ve ever found it tough to “un-train” YouTube’s algorithm from suggesting a certain kind of video once it has slipped into your recommendations. In fact, getting YouTube to understand your tastes may be harder than you think. According to a new Mozilla study, one key issue is that YouTube’s in-app controls, such as the “Dislike” button, are largely ineffective as a tool for controlling recommended content. These buttons, according to the research, “prevent less than half of all unwelcome algorithmic recommendations.”

Mozilla researchers used data from RegretsReporter, a browser extension that lets users “give” their recommendation data for use in studies like this one. Overall, the analysis was based on millions of recommended videos and anecdotal reports from thousands of people.

Mozilla investigated the efficacy of four controls: the thumbs-down “Dislike” button, “Not interested,” “Don’t recommend channel,” and “Remove from watch history.” The researchers found that these were effective to varying degrees, but that the overall impact was “minimal and inadequate.”

The most effective control was “Don’t recommend channel,” which stopped 43 percent of unwanted recommendations, while “Not interested” was the least effective, preventing only about 11 percent. The “Dislike” button fared similarly at 12 percent, while “Remove from watch history” prevented roughly 29 percent.

Mozilla’s researchers wrote in their report that study participants would sometimes go to considerable lengths to avoid unwelcome recommendations, such as watching videos while signed out or while connected to a VPN. According to the researchers, the study underscores the need for YouTube to better explain its controls to users and to give people more proactive ways to define what they want to see.

“The way YouTube and many other platforms operate is that they rely on a lot of passive data collection to infer what your tastes are,” explains Becca Ricks, a senior researcher at Mozilla who co-wrote the report. “However, it’s a bit of a paternalistic way of operating in that you’re making decisions on people’s behalf. You might ask people what they want to do on the platform rather than just monitoring what they do.”

Mozilla’s research comes as major platforms face rising pressure to make their algorithms more transparent. Legislators in the United States have proposed bills to limit “opaque” recommendation algorithms and hold firms accountable for algorithmic bias. The European Union is further along: its recently passed Digital Services Act requires platforms to explain how their recommendation algorithms function and to make them available to outside researchers.